Enterprise‑Grade ETL for High‑Volume, Mission‑Critical Workloads

IBM InfoSphere DataStage, implemented and supported by AMSYS, provides a scalable, parallel ETL platform optimized for complex enterprise environments. AMSYS delivers complete DataStage solutions, covering architecture, migration, tuning, and 24/7 support, so your data pipelines run reliably at scale.

What is IBM InfoSphere DataStage?

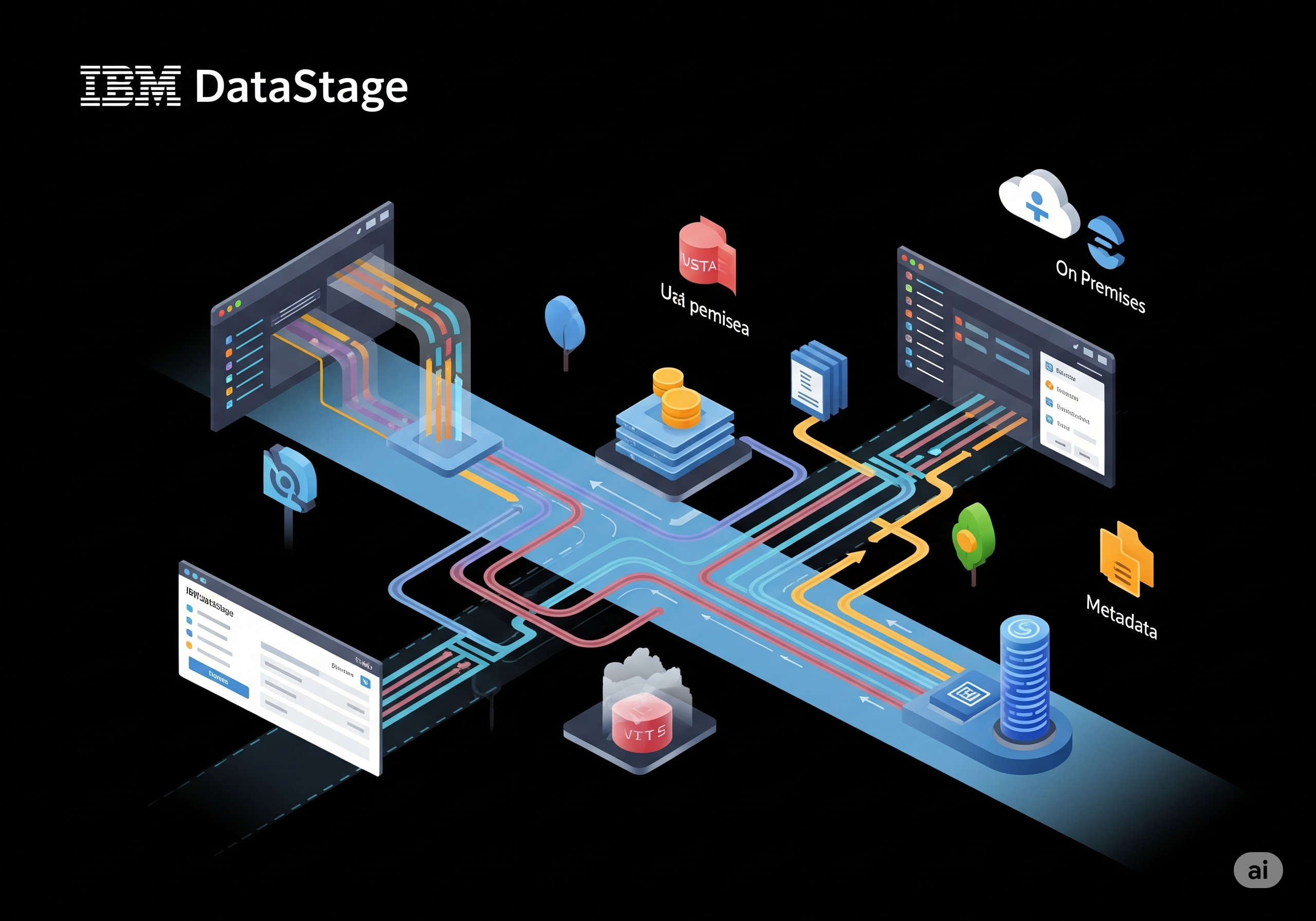

IBM InfoSphere DataStage is a leading ETL tool that enables organizations to design, develop, and run high‑performance data integration jobs. AMSYS leverages DataStage’s parallel processing engine, graphical job designer, and metadata framework to build resilient pipelines across on‑premises, cloud, and hybrid landscapes.

Overcome bottlenecks, complexity, and governance gaps with AMSYS expertise.

Processing terabytes daily with legacy ETL causes long batch windows and missed SLAs.

Mainframes, relational databases, and cloud services require unified integration.

Implementing sophisticated business rules across datasets demands scalable tooling.

Ensuring job success and automated recovery at scale is critical for operations.

Tracking lineage, impact analysis, and auditing across jobs is challenging without a central framework.

Powerful capabilities for enterprise‑scale ETL and data governance.

Parallel engine combines pipeline and partition parallelism to scale across cores and nodes for high throughput.

Drag‑and‑drop interface accelerates development and simplifies maintenance.

Support for scheduled batch jobs and low‑latency change data capture (CDC) streams.

Out‑of‑the‑box adapters for mainframes, databases, Hadoop, and cloud sources.

Deliver faster, more reliable insights with proven enterprise ETL.

Structured approach to deploy, migrate, and optimize DataStage at scale.

Analyze existing ETL jobs and define a roadmap for migration or modernization.

Design high‑availability, parallel environments on‑premises or in the cloud.

Automate job conversion and data validation to move workloads smoothly.
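Data validation after a migration typically starts by reconciling row counts and checksums between the legacy output and the migrated output. The Python sketch below assumes both result sets can be streamed as tuples of column values. `row_digest`, `table_fingerprint`, and `reconcile` are hypothetical helpers, not DataStage features.

```python
import hashlib

def row_digest(row):
    """Hash one row. None becomes an empty string, and fields are joined with a unit-separator char."""
    # str() is a naive normalization; real checks must align types, precision, and encodings first.
    text = "\x1f".join("" if value is None else str(value) for value in row)
    return int.from_bytes(hashlib.sha256(text.encode("utf-8")).digest(), "big")

def table_fingerprint(rows):
    """Return (row_count, checksum). Summing digests modulo 2**256 makes row order irrelevant."""
    count, checksum = 0, 0
    for row in rows:
        count += 1
        checksum = (checksum + row_digest(row)) % (2 ** 256)
    return count, checksum

def reconcile(source_rows, target_rows):
    """Compare a legacy result set with its migrated counterpart."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    return {
        "source_rows": src_count,
        "target_rows": tgt_count,
        "counts_match": src_count == tgt_count,
        "checksums_match": src_sum == tgt_sum,
    }

legacy = [(1, "ACME", 250.0), (2, "Globex", None)]
migrated = [(2, "Globex", None), (1, "ACME", 250.0)]
print(reconcile(legacy, migrated))
# {'source_rows': 2, 'target_rows': 2, 'counts_match': True, 'checksums_match': True}
```

Summing digests keeps memory flat for large tables instead of sorting and comparing rows. The trade-off is that a mismatch only tells you that something differs. Finding the affected rows still takes a keyed row-by-row comparison.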

24/7 AMSYS monitoring, troubleshooting, and continuous improvement services.

Key guidelines to ensure performance, reliability, and governance.

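Choose round‑robin, hash, or range partitioning based on data distribution and on whether downstream stages, such as joins and aggregations, need rows with the same key in the same partition.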
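The differences between the three methods are easiest to see on a few rows. This Python sketch only illustrates the logic, assuming rows are dictionaries. In DataStage the partitioning method is chosen in the job design rather than coded by hand, and the function names here are hypothetical.

```python
import bisect
import zlib

def round_robin(rows, n):
    """Deal rows to n partitions in turn: even counts, regardless of content."""
    parts = [[] for _ in range(n)]
    for i, row in enumerate(rows):
        parts[i % n].append(row)
    return parts

def hash_partition(rows, n, key):
    """Send every row with the same key value to the same partition."""
    parts = [[] for _ in range(n)]
    for row in rows:
        # crc32 is stable across processes; Python's built-in hash() of a str is salted per process.
        parts[zlib.crc32(str(row[key]).encode("utf-8")) % n].append(row)
    return parts

def range_partition(rows, boundaries, key):
    """Partition i holds keys k with boundaries[i-1] <= k < boundaries[i]; boundaries must be sorted."""
    parts = [[] for _ in range(len(boundaries) + 1)]
    for row in rows:
        parts[bisect.bisect_right(boundaries, row[key])].append(row)
    return parts

rows = [
    {"customer": "A", "amount": 50},
    {"customer": "B", "amount": 150},
    {"customer": "A", "amount": 250},
    {"customer": "C", "amount": 120},
]
print([len(p) for p in round_robin(rows, 2)])                       # [2, 2]
print([len(p) for p in range_partition(rows, [100, 200], "amount")])  # [1, 2, 1]
```

Each method has a different strength and cost:

- Round-robin balances partition sizes but scatters keys.
- Hash keeps each key in one partition, but partitions become skewed when a few keys dominate.
- Range keeps contiguous key ranges together, but balances well only when the boundaries reflect the actual distribution.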

Leverage the metadata repository for impact analysis and audit trails.

Build parameterized job templates and shared routines to accelerate development.
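A parameterized template is a single job whose environment-specific values, such as region or run date, arrive as job parameters at run time. The sketch below assumes jobs are launched through the `dsjob` command-line client on the engine tier, and it only builds the argument list. The project, job, and parameter names are hypothetical. Check the options against the `dsjob` reference for your version.

```python
import shlex

def build_dsjob_run(project, job, params, mode="NORMAL"):
    """Build a dsjob argument list that runs one parameterized job (it does not execute it)."""
    if mode not in {"NORMAL", "RESET", "VALIDATE"}:
        raise ValueError(f"unsupported run mode: {mode}")
    cmd = ["dsjob", "-run", "-mode", mode]
    for name, value in sorted(params.items()):
        cmd += ["-param", f"{name}={value}"]
    # -jobstatus makes dsjob wait for the job and return an exit code based on its status.
    cmd += ["-jobstatus", project, job]
    return cmd

# One template job, reused for each region by changing only its parameter values.
# SALES_DW, LoadOrders_Template, pRegion and pRunDate are hypothetical names.
for region in ("EMEA", "APAC"):
    cmd = build_dsjob_run("SALES_DW", "LoadOrders_Template",
                          {"pRegion": region, "pRunDate": "2024-01-31"})
    print(shlex.join(cmd))  # pass cmd to subprocess.run() to actually launch the job
```

Building the command as a list rather than a single string avoids shell-quoting problems when parameter values contain spaces.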

Integrate with monitoring tools and set alerts on job failures and performance bottlenecks.

Review job metrics regularly and refine configurations for evolving data volumes.
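Failure alerts and bottleneck alerts can share one rule set: flag any failed run, and flag any run that takes much longer than its recent history. A minimal Python sketch follows, assuming run records are already collected from your monitoring tool. The record fields, the status labels, and the 1.5x threshold are assumptions to tune.

```python
from statistics import median

# Hypothetical status labels; map them from whatever your scheduler or dsjob reports.
FAILED_STATUSES = {"ABORTED", "CRASHED"}

def find_alerts(history, latest_runs, slow_factor=1.5):
    """Flag failed runs, and runs slower than slow_factor times the job's median past duration."""
    alerts = []
    for run in latest_runs:
        job = run["job"]
        if run["status"] in FAILED_STATUSES:
            alerts.append((job, "failed"))
            continue
        past = history.get(job, [])
        if past and run["duration_s"] > slow_factor * median(past):
            alerts.append((job, "slow"))
    return alerts

history = {"LoadOrders": [600, 620, 640], "LoadCustomers": [120, 130]}
latest = [
    {"job": "LoadOrders", "status": "OK", "duration_s": 1000},
    {"job": "LoadCustomers", "status": "ABORTED", "duration_s": 15},
    {"job": "LoadProducts", "status": "OK", "duration_s": 50},
]
print(find_alerts(history, latest))
# [('LoadOrders', 'slow'), ('LoadCustomers', 'failed')]
```

The baseline is a median of recent runs, so it drifts upward as data volumes grow. Keeping only a rolling window of history stops very old runs from skewing it.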

High‑speed ETL for any enterprise data source.

Efficiently handle full data loads and upserts with change data capture support.
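A full load rebuilds the target from a complete snapshot. An incremental load applies only the captured changes, as upserts and deletes. The Python sketch assumes a hypothetical change-record format with `op` codes `I`, `U`, and `D`. Real CDC feeds define their own record formats and ordering guarantees.

```python
def apply_changes(target, changes, key="id"):
    """Apply change records, in commit order, to a target table held as {key: row}."""
    for change in changes:
        op, row = change["op"], change["row"]
        k = row[key]
        if op in ("I", "U"):
            # Upsert: insert when the key is new, otherwise replace the existing row.
            target[k] = dict(row)
        elif op == "D":
            target.pop(k, None)  # deleting a key that is already gone is a no-op
        else:
            raise ValueError(f"unknown change operation: {op!r}")
    return target

# Full load: build the target once from a complete snapshot.
snapshot = [{"id": 1, "qty": 5}, {"id": 2, "qty": 3}]
target = {row["id"]: row for row in snapshot}

# Incremental load: apply only the changes captured since the snapshot.
changes = [
    {"op": "U", "row": {"id": 1, "qty": 7}},
    {"op": "I", "row": {"id": 3, "qty": 1}},
    {"op": "D", "row": {"id": 2}},
]
print(apply_changes(target, changes))
# {1: {'id': 1, 'qty': 7}, 3: {'id': 3, 'qty': 1}}
```

Treating inserts as upserts makes a replayed batch harmless, which matters when a load is restarted after a failure.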

Connect seamlessly to on‑premises databases, cloud warehouses, and mainframes.

Automate and control complex ETL workflows.

Define dependencies, triggers, and event‑driven schedules in one console.
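Job dependencies form a directed acyclic graph, and any valid run order is a topological sort of that graph. The Python sketch below needs Python 3.9+ for `graphlib`. It assumes dependencies are given as a mapping from each job to the jobs it waits on, and the job names are hypothetical. It groups jobs into waves, so that jobs in the same wave could run concurrently.

```python
from graphlib import TopologicalSorter

def run_waves(dependencies):
    """Group jobs into waves; each wave's jobs depend only on jobs in earlier waves."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # a real scheduler would mark a job done only after it succeeds
    return waves

dependencies = {
    "Stage_Orders": set(),
    "Stage_Customers": set(),
    "Load_Fact_Sales": {"Stage_Orders", "Stage_Customers"},
    "Refresh_Marts": {"Load_Fact_Sales"},
}
print(run_waves(dependencies))
# [['Stage_Customers', 'Stage_Orders'], ['Load_Fact_Sales'], ['Refresh_Marts']]
```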

Run multiple jobs concurrently with resource‑aware coordination.
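Resource-aware coordination can be sketched as a shared pool of slots: each job declares how many slots it needs and starts only when that many are free. This Python sketch uses a condition variable to do that. The slot weights and `fake_job` are hypothetical stand-ins. The pool is not fair, so a large job can wait behind a stream of small ones.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

class SlotPool:
    """Shared capacity (e.g. engine slots); a job waits until enough slots are free."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.in_use = 0
        self.peak = 0  # highest simultaneous usage, kept for reporting
        self._cond = threading.Condition()

    def acquire(self, weight):
        if weight > self.capacity:
            raise ValueError(f"job needs {weight} slots but capacity is {self.capacity}")
        with self._cond:
            self._cond.wait_for(lambda: self.in_use + weight <= self.capacity)
            self.in_use += weight
            self.peak = max(self.peak, self.in_use)

    def release(self, weight):
        with self._cond:
            self.in_use -= weight
            self._cond.notify_all()

def run_concurrently(jobs, pool, run_job):
    """jobs: {name: slots needed}. Runs every job without exceeding the pool's capacity."""
    def guarded(name, weight):
        pool.acquire(weight)
        try:
            return run_job(name)
        finally:
            pool.release(weight)

    with ThreadPoolExecutor(max_workers=max(1, len(jobs))) as executor:
        futures = {name: executor.submit(guarded, name, w) for name, w in jobs.items()}
        return {name: f.result() for name, f in futures.items()}

def fake_job(name):
    time.sleep(0.05)  # stand-in for real work
    return f"{name}: OK"

pool = SlotPool(capacity=4)
results = run_concurrently({"A": 2, "B": 2, "C": 1, "D": 3}, pool, fake_job)
print(results, "peak slots:", pool.peak)
```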

Implement retry logic, error notifications, and automated restarts.
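Retry logic usually combines a bounded number of attempts with an increasing delay, so that a transient problem such as a locked table has time to clear. A minimal Python sketch follows, assuming the job launch is wrapped in a callable that raises on failure. `notify` stands in for whatever alert channel you use, and the default values are assumptions.

```python
import time

def run_with_retry(run_job, max_attempts=3, base_delay=30.0, notify=print, sleep=time.sleep):
    """Call run_job() until it succeeds, waiting base_delay, then 2x, 4x, ... between attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return run_job()
        except Exception as exc:
            if attempt == max_attempts:
                notify(f"job failed after {attempt} attempts: {exc}")
                raise
            delay = base_delay * 2 ** (attempt - 1)
            notify(f"attempt {attempt} failed ({exc}); retrying in {delay:g}s")
            sleep(delay)

attempts = {"count": 0}

def flaky_load():
    """Hypothetical job that fails twice, then succeeds."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("target table locked")
    return "finished OK"

print(run_with_retry(flaky_load, base_delay=0.01))
```

Retries suit transient faults. A job that fails on bad data will fail the same way every time, so for those jobs the final notification matters more than the retry count.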

Chain jobs based on success, failure, or custom conditions.
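Conditional chaining can be modeled as links that each carry a trigger. A link fires on success, fires on failure, or fires when a custom predicate on the finished job's result holds. This is roughly what the OK, Failed, and Custom triggers on DataStage job sequence links express. The Python sketch assumes a hypothetical result format with `status` and `rows` fields, and links that form no cycle.

```python
from collections import deque

def triggered(links, job, result):
    """Return downstream jobs whose trigger on a link from `job` is satisfied by its result."""
    selected = []
    for source, trigger, target in links:
        if source != job:
            continue
        if trigger == "success":
            fire = result["status"] == "OK"
        elif trigger == "failure":
            fire = result["status"] != "OK"
        else:
            fire = trigger(result)  # custom condition: any predicate on the result
        if fire:
            selected.append(target)
    return selected

def run_chain(links, start, run_job):
    """Run `start`, then every job its results trigger; returns [(job, status), ...]."""
    log, queue = [], deque([start])
    while queue:
        job = queue.popleft()
        result = run_job(job)
        log.append((job, result["status"]))
        queue.extend(triggered(links, job, result))
    return log

links = [
    ("Extract", "success", "Transform"),
    ("Extract", "failure", "NotifyOps"),
    ("Transform", lambda r: r["rows"] > 0, "Load"),
]
outcomes = {
    "Extract": {"status": "OK", "rows": 1200},
    "Transform": {"status": "OK", "rows": 0},
}
print(run_chain(links, "Extract", lambda job: outcomes[job]))
# [('Extract', 'OK'), ('Transform', 'OK')]  (Load is skipped: no rows to load)
```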

Capture detailed runtime logs and audit trails for compliance.

Low‑latency pipelines for mission‑critical data needs.

Complete visibility and control over your ETL ecosystem.

Central Metadata Repository

Store job definitions, schemas, and annotations in one place.

End‑to‑End Lineage

Trace data flows from source to target across all jobs.
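Lineage can be traced by walking job definitions backwards. Start with the jobs that write a dataset, then the datasets those jobs read, and continue until you reach datasets that nothing writes. The minimal Python sketch below works at dataset level and assumes a hypothetical mapping of jobs to the datasets they read and write. Production lineage tools often track individual columns as well.

```python
def upstream_lineage(jobs, dataset):
    """Return (jobs, source datasets) that feed `dataset`, following reads and writes backwards."""
    producers = {}
    for job, io in jobs.items():
        for written in io["writes"]:
            producers.setdefault(written, []).append(job)

    lineage_jobs, sources, visited = set(), set(), set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        if current in visited:
            continue
        visited.add(current)
        makers = producers.get(current, [])
        if not makers and current != dataset:
            sources.add(current)  # no job writes it, so it is an original source
        for job in makers:
            if job not in lineage_jobs:
                lineage_jobs.add(job)
                stack.extend(jobs[job]["reads"])
    return lineage_jobs, sources

jobs = {
    "ExtractOrders": {"reads": ["erp.orders"], "writes": ["stg.orders"]},
    "ExtractCustomers": {"reads": ["crm.customers"], "writes": ["stg.customers"]},
    "BuildSalesFact": {"reads": ["stg.orders", "stg.customers"], "writes": ["dw.fact_sales"]},
    "BuildChurnMart": {"reads": ["crm.customers"], "writes": ["mart.churn"]},
}
print(upstream_lineage(jobs, "dw.fact_sales"))
```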

Impact Analysis

Assess downstream effects before making changes to jobs or schemas.
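Impact analysis walks the job graph forwards. Starting from a changed dataset, it finds every job that reads it, the datasets those jobs write, and so on down the chain. The Python sketch below is a dataset-level illustration. It assumes a hypothetical mapping of jobs to the datasets they read and write.

```python
def downstream_impact(jobs, changed):
    """Return (jobs, datasets) that may be affected if dataset `changed` changes."""
    consumers = {}
    for job, io in jobs.items():
        for source_name in io["reads"]:
            consumers.setdefault(source_name, []).append(job)

    affected_jobs, affected_data = set(), set()
    stack = [changed]
    while stack:
        current = stack.pop()
        for job in consumers.get(current, []):
            if job in affected_jobs:
                continue
            affected_jobs.add(job)
            for written in jobs[job]["writes"]:
                if written not in affected_data:
                    affected_data.add(written)
                    stack.append(written)
    return affected_jobs, affected_data

jobs = {
    "ExtractOrders": {"reads": ["erp.orders"], "writes": ["stg.orders"]},
    "ExtractCustomers": {"reads": ["crm.customers"], "writes": ["stg.customers"]},
    "BuildSalesFact": {"reads": ["stg.orders", "stg.customers"], "writes": ["dw.fact_sales"]},
    "BuildChurnMart": {"reads": ["crm.customers"], "writes": ["mart.churn"]},
}
print(downstream_impact(jobs, "crm.customers"))
```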

Business Glossary

Enrich metadata with business terms for clear communication.

Audit & Compliance

Generate reports on job usage, changes, and data access for regulators.